Piloting AI's Next Hardware Innovations

- RCD

- Nov 25, 2024

Updated: Jan 13, 2025

tl;dr

No one in the Tech hardware supply chain can ignore the AI opportunity even if the outlook past the next two years is uncertain.

AI differs from past Tech hardware growth cycles. Innovation no longer depends on Moore’s Law, and the market structure is more consolidated.

The next few generations of AI hardware will increasingly depend on innovations occurring away from the GPU silicon. They will rely on interconnects, power delivery, and thermal management. It is an important opportunity for the electronics supply chain.

However, it is challenging for supply chain organizations to capture value. Their business model is to deliver products and transact on their component manufacturing expertise. The competition for that expertise is fierce in an environment where Nvidia and TSMC are gatekeepers. It puts an enormous drag on the investment thesis, even if the opportunity is large.

Ultimately, organizations must build a moat through products and transactions that deliver intellectual property (IP). One way to do that is to integrate across supply chain levels. It's by no means easy. However, it is one of the best ways to move from a low-differentiation "value share" to a high-differentiation "value creation" business. Some examples from organizations attempting to do this are provided.

Can't Miss Out

No one honest about AI should forecast past the next two years. There are too many unknowns. At one extreme, evangelists look at exponential scaling for large language models (LLMs) and infer a horse race. For these folks, Capex spending is a massive bet to get to market fast and reap the profits before anyone else. The mantra is clear, "it's better to overinvest rather than underinvest." Nvidia's financial results have indeed validated their view.

At the other extreme, contrarians are fretting over the investment costs, with dot-com comparisons never too far from memory. Avoiding a bubble depends on how fast the downstream AI ecosystem can grow profits relative to hardware investments. No one has a good grasp of this dynamic yet. The only data point publicly available is from OpenAI's leaked financial documents. In those documents, the company projected it would lose $44Bn by 2028 and not turn a profit until 2029 when revenues reach $100Bn. It is a lot of invested capital. Investors will rush for the exits if credible reports (maybe this one? or even this one?) come out that the technology or end markets aren't living up to the hype.

Regardless of what the crystal ball tells you, every company in the Tech hardware supply chain has to have an AI strategy. No executive worth their salt can ignore the extra $200Bn+ that will be added to the electronics industry next year, even if possibly at the peak of the hype cycle. That influx from Capex spending will trickle into every part of the supply chain.

It should be easier to capture value when the whole sector is racing to deliver bleeding-edge equipment to customers investing fortunes. Things are moving fast. However, the AI growth wave differs from past Tech hardware growth cycles. Innovation is coming from different places, and the market structure is more consolidated. Understanding these distinctions will be necessary for any organization planning its AI strategy.

Moore's Law Isn't Around to Help Anymore

There is a common theme among venture capitalists and AI evangelists comparing the current growth boom to previous growth cycles like the PC in the 1980s and the smartphone in the 2000s. It's an attractive comparison. But the analogy runs out of steam pretty quickly.

Moore's Law powered both the PC and the smartphone growth cycles. Product lifecycles churned every 18 months with new features and capabilities. The latest designs regenerated demand and helped support growth as new use cases and new users adopted the technology. Fast forward to today, and Moore's Law has slowed considerably. It is still important. However, it is more challenging (and expensive) to squeeze more performance out of each unit area of silicon (FLOPS/area, $/area, and power/area).

There is always the possibility of revolutionary architectural innovation. Novel computing architectures like neuromorphic and new energy-efficient computing efforts are possible. Quantization (i.e., lower-precision number representation) can also improve the Si area efficiency. But these are more wild cards than a trajectory in the technology roadmaps. (Then again, venture capital is usually drawn to wild cards.)

For the existing GPU matrix-multiply processors, a large chunk of the innovation for the next few years (in between fab nodes) will focus on improving the utilization of the silicon. Technologies that improve utilization will allow GPUs to perform close to their theoretical performance limits. Calculating the value of improved utilization is straightforward, and much of the heavy lifting for this analysis has already been done through excellent discussions on social media, the blogosphere, Substacks, and conference presentations over the past few years.
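As a rough illustration of why utilization matters, the sketch below treats delivered throughput as peak throughput multiplied by utilization and compares the hardware cost per delivered TFLOP at two utilization levels. The peak throughput, price, and utilization figures are hypothetical placeholders chosen for easy arithmetic, not data from any vendor.

```python
def effective_tflops(peak_tflops: float, utilization: float) -> float:
    """Delivered throughput = peak throughput x utilization (a fraction from 0 to 1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak_tflops * utilization


def cost_per_effective_tflop(price_usd: float, peak_tflops: float, utilization: float) -> float:
    """Hardware price divided by the throughput the silicon actually delivers."""
    return price_usd / effective_tflops(peak_tflops, utilization)


# Hypothetical accelerator: every number below is an assumption for illustration.
PEAK_TFLOPS = 1000.0
PRICE_USD = 30000.0

baseline = cost_per_effective_tflop(PRICE_USD, PEAK_TFLOPS, utilization=0.40)
improved = cost_per_effective_tflop(PRICE_USD, PEAK_TFLOPS, utilization=0.50)
savings_fraction = 1.0 - improved / baseline

print(f"baseline: ${baseline:.2f} per delivered TFLOP")
print(f"improved: ${improved:.2f} per delivered TFLOP")
print(f"cost reduction: {savings_fraction:.0%}")
```

Under these assumptions, raising utilization from 40% to 50% lowers the cost of each delivered TFLOP without any change to the GPU silicon itself, which is the sense in which off-chip technologies can add value.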

Software utilization, cluster efficiencies, memory bandwidth, and component reliability are all critical pathways for adding value. These innovations occur away from the GPU silicon. They depend on interconnects (including memory bandwidth), power delivery, and thermal management.

The problem with relying on these technologies is that they don't scale as nicely as Moore's Law once did. This has led to "innovation walls" that engineers have to deal with. There are interconnect and memory walls. We can also add energy and thermal walls.

Technology Roadmap

These walls set up the goalposts for technology roadmaps. Below is a running list that the firm has compiled. It is not exhaustive, and the time frames are by no means exact. Nor are we claiming any particular insight. Engineers involved in the day-to-day development already know where these technologies are heading.

Some of these technological developments are foundational. It's a good bet that the transition to liquid cooling will continue in subsequent generations of processors. However, some new technologies could have a lifespan of only one generation. The optical transceiver industry has understood this dynamic all too well in past product development cycles. And it may be living through the same dynamic again today with the debate over linear drive pluggables vs. co-packaged optics.

Innovation walls also point to opportunity. Absent any new AI architecture development, value creation in the next few generations of AI processing will depend on progress in power delivery, interconnects, and thermal management.

Startups have made many attempts to capture this value through chip design. AI chip startup MatX recently raised over $100M in funding for a new chip that, according to their website, excels at scaling out large clusters of AI servers because of an "excellent" new interconnect.

It is also an important opportunity for the "non-processor" Tech hardware supply chain. Much of the technology that delivers interconnects, power, and thermal management to the GPU processor comes from analog chip companies, fabricated part makers, substrate fabricators, and passive and interconnect component suppliers. However, it is challenging for these organizations to capture value, even if the demand from customers is high. We need to understand how these suppliers currently interact with the AI supply chain to explain why.

Value Accrues to the Dominant Suppliers

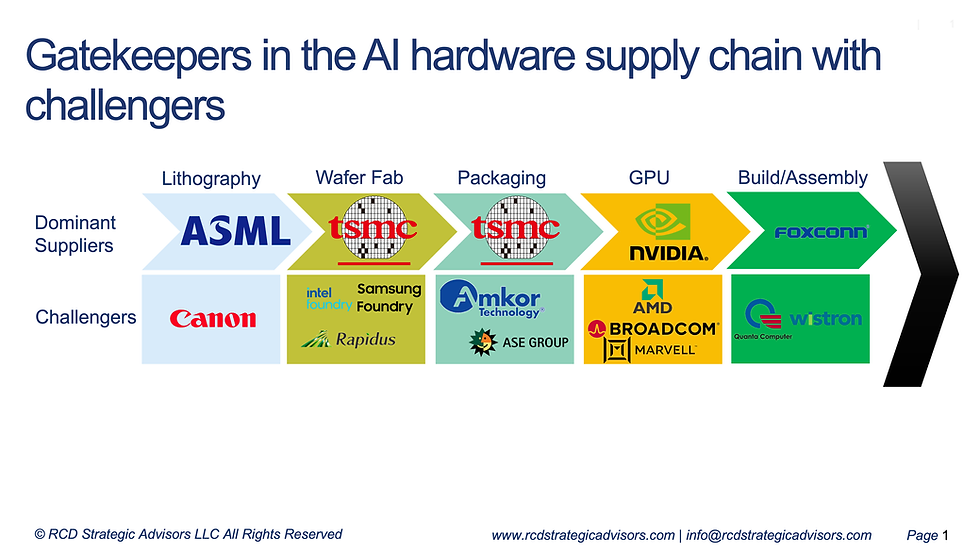

The other difference in today's AI wave versus previous hardware growth cycles is that there are fewer players in the value chain. It's easy to look back now and point to Wintel's dominance in the PC market or Apple's dominance in the smartphone sector. However, the market was widely contested in the early stages of these growth cycles. Only in the later stages did the "winners take all." That isn't the case for the new AI growth wave and the current state of the semiconductor industry. Today, the AI hardware supply chain has one dominant supplier at each stage (Nvidia, TSMC, and, for completeness, ASML and Foxconn). Value has accrued immediately to these big power players. Any new technology runs through these organizations.

The siloed supply chain structure has some advantages. Machine learning algorithms, use cases, and business models are changing fast, much faster than hardware development can keep up. With only one dominant supplier at each stage, it is easier to pass along demand signals. That quick communication can help avoid the dreaded bullwhip effect when "podcast bros" go rogue. It has also helped coordinate the rapid design changes for critical bottleneck processes (like advanced IC packaging and the changing allocations between CoWoS-S and CoWoS-L to accommodate revisions to Nvidia's product family).

The result of this supply chain structure, though, is that it limits how new technology accrues value. Most of the value is captured by the dominant players. They have the privilege of acting as gatekeepers because they own the channel. It gives them better bargaining leverage.

The next generation of AI innovation will likely depend on component makers who supply interconnects, power delivery, and thermal management. Component makers typically win the business by relying on preferred relationships and early engagement that give them a premium print position on the BOM. Their business model is to deliver products and transact on their component manufacturing expertise.

The problem with this approach is that the value accrued depends on the scarcity of that expertise. If there is no scarcity, there is limited competitive advantage. The value that does leak past the main gatekeepers gets competed away quickly, and returns fall toward the cost of capital.

In its recent Q3 earnings, Monolithic Power Systems (MPS), the lead supplier of power delivery VRMs to Nvidia, suggested it would lose some business in the next few quarters as Nvidia added second and third sources. MPS won 100% of the socket in the first few quarters. Even though MPS developed a robust early relationship, won print position, and executed flawlessly, it still could not keep all of the business. Other suppliers were able to catch up quickly and shave off some share of the value.

New entrants can plan to enter the supply chain as a reliable second or third source, biding their time until (if) the primary supplier fails. Or, they can cast their lot with challengers to the gatekeepers (hyperscaler custom solutions or emerging foundries). In either case, the organization is competing for a share of the value allotted to the supply chain.

The leading edge for power delivery, thermal management, and interconnects is not an easy environment in which to innovate. R&D costs are high, timing is critical, and the technology changes could be fleeting. Success depends on your customers winning, your competitors stumbling, or both. Adding to the risk is the real possibility that the end markets can bubble and crash. It puts an enormous drag on any investment thesis. Component makers' business models don't leave much room for making these high-risk technology bets. But with the AI growth wave, and the right business model, the payoffs may be worth it.

It's About the IP

As mentioned in a previous post, the power players often pick up the slack and do the heavy lifting to develop, seed, and nurture new technologies. That is why most Big Iron suppliers are vertically integrated. It is also why Nvidia, for the most part, is driving the development of liquid cooling solutions, co-packaged optics, and power delivery.

Consortia, like the Open Compute Project, and standards groups like OIF, play an essential role in creating and organizing standards, common form factors, and reference designs. The leading AI equipment makers will undoubtedly leverage the work from these standards bodies. But consortia are like mirror images of distributors. Suppliers use distributors to find customers. Customers use consortia to find suppliers. It's a channel, not a way for companies to add value.

The startup ecosystem can help by absorbing the development risks with external capital and partnering with the power players. However, the exit strategy is almost always an acquisition by an existing player (e.g., JetCool was recently acquired by Flextronics). And value creation is usually limited by where the strategic acquirer sits in the supply chain.

External capital to reduce risk can also come from the government (e.g., the CHIPS Act and other regional subsidies). However, there are significant political risks with any strategy that depends on public funds.

For completeness, external capital can also come from adjacent industries. In this scenario, AI applications ride the coattails of other market sectors, like smartphones or automobiles. But Big Iron is at the bleeding edge. It is the coattail, not the coattail rider.

For a business model in the AI hardware supply chain to capture the most value from internal capital at risk, component makers must deliver products and transact around the intellectual property (IP), not their process expertise. Licensing IP is one clear way to make the transaction explicit. However, licensing is generally not in the DNA of the Tech hardware supply chain because most of the know-how is related to manufacturing expertise.

Another way component makers can transact on IP is by embedding it inside of a material technology. Material solutions are typically “stickier” and more difficult to substitute. Component makers can also embed IP by integrating several components into modules to interact with each other in a way that adds value.

Although by no means easy, another way to achieve this interaction is to integrate across supply chain levels. Component suppliers can achieve this through vertical integration, but that is often too risky. A better approach is through a partnership or joint development. Some examples playing out in the industry are described below.

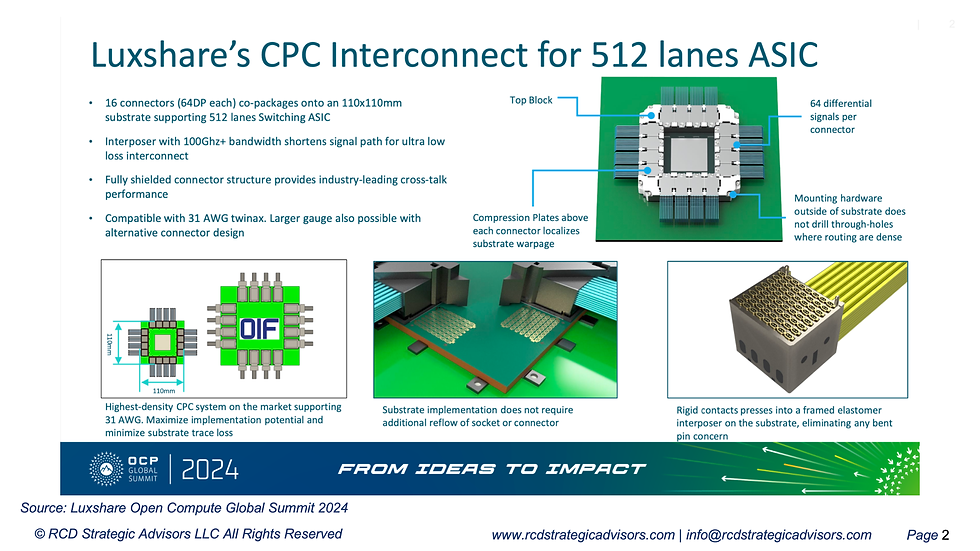

An Example of Co-Packaged Copper

At recent conferences, Luxshare has exhibited its Koolic co-packaged copper (CPC) assembly. One problem with CPC is that the bulky cables can warp the IC package. Luxshare engineers worked with a materials supplier to develop an elastomeric connector that mounts between the rigid contacts and the substrate. The elastomer provides enough elasticity to prevent the substrate from warping under the pressure of the connector.

CPC has been talked about for a while as a lower-cost alternative to co-packaged optics (CPO). Moreover, elastomeric connectors have a long history in high-performance computers. Neither technology is new by itself, but the real value lies in combining them into one assembly and ensuring they work well together.

Luxshare engineers claim they have created a novel elastomer explicitly designed for this application, solving many reliability problems plaguing previous elastomeric connectors. If the link between the properties of this new elastomer, CPC reliability, and overall signal integrity is as strong as Luxshare suggests, the company could capture more value, especially if CPC technology wins a socket in the next generation of AI processors.

An Example of Passive Component Integration

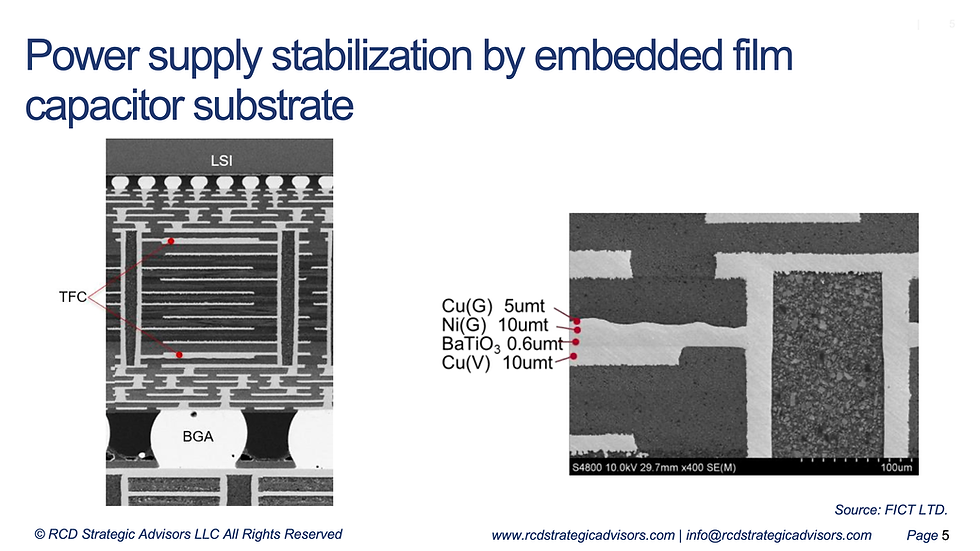

One of the biggest challenges in powering the next generation of AI processors is reducing the impedance of the power distribution network between the voltage regulator module (VRM) and the processor. VRM makers are developing products so that AI processors can transition from lateral to vertical power delivery systems. In a lateral power setup, the VRMs are placed on the side of the GPU. In vertical power delivery, however, the VRMs are on the back of the card under the GPU processor. Vertical power delivery shortens the interconnect, reduces the impedance, and improves efficiency.
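To make the efficiency argument concrete, the sketch below estimates conduction loss in the delivery path as I²R, using DC resistance as a simplified stand-in for the full impedance of the power distribution network. The load current and both path resistances are hypothetical values picked for illustration, not measurements of any real board.

```python
def conduction_loss_watts(current_a: float, path_resistance_ohm: float) -> float:
    """Resistive loss in the delivery path: P = I^2 * R."""
    return current_a ** 2 * path_resistance_ohm


# Assumed values for illustration only.
LOAD_CURRENT_A = 1000.0     # hypothetical processor load current
LATERAL_PATH_OHM = 200e-6   # hypothetical longer path, VRMs beside the GPU
VERTICAL_PATH_OHM = 50e-6   # hypothetical shorter path, VRMs under the GPU

lateral_loss = conduction_loss_watts(LOAD_CURRENT_A, LATERAL_PATH_OHM)
vertical_loss = conduction_loss_watts(LOAD_CURRENT_A, VERTICAL_PATH_OHM)

print(f"lateral path loss:  {lateral_loss:.0f} W")
print(f"vertical path loss: {vertical_loss:.0f} W")
```

Because the loss grows with the square of the current, even a modest reduction in path resistance is worth a lot at the current levels AI processors draw.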

Vertical power delivery can provide enough current to reach about 1 A/mm². However, reaching 2 A/mm² or more will require embedding voltage regulators and energy storage devices in the package substrate. Many suppliers of passive components are working on better ways to embed these components. The most popular method is to place components inside the layers of the substrate, either by creating small cavities or using over-molding techniques. For example, Murata introduced its iPAS technology, which puts capacitor components inside the organic substrate. Other companies like AVX, Taiyo Yuden, and Samsung offer similar products. The main advantage of this approach is the high energy density.
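For a sense of scale, current density converts to total current by multiplying by the powered die area. The short sketch below does that arithmetic for the two density levels mentioned above. The 800 mm² die area is a hypothetical round number, not the area of any specific processor.

```python
def required_current_a(current_density_a_per_mm2: float, die_area_mm2: float) -> float:
    """Total current = current density x powered die area."""
    return current_density_a_per_mm2 * die_area_mm2


DIE_AREA_MM2 = 800.0  # hypothetical die area, for illustration only

current_at_1 = required_current_a(1.0, DIE_AREA_MM2)
current_at_2 = required_current_a(2.0, DIE_AREA_MM2)

print(f"1 A/mm^2 over {DIE_AREA_MM2:.0f} mm^2: {current_at_1:.0f} A")
print(f"2 A/mm^2 over {DIE_AREA_MM2:.0f} mm^2: {current_at_2:.0f} A")
```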

The other method to embed energy storage components involves creating structures on films with high dielectric or magnetic properties. FICT introduced a decoupling film in 2022. TDK offers a thin film with sputtered dielectric materials on a copper foil. Yageo Kemet has disclosed a novel aluminum polymer film. These films have lower energy storage but have the advantage of being closer to the point of load and can decouple transients more effectively.
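One way to see why proximity to the point of load helps is the first-order inductive droop, V = L × di/dt: a capacitor reached through a shorter loop has less loop inductance, so the same load step produces a smaller voltage dip. The sketch below applies that formula. The inductance values, current step, and rise time are all hypothetical, and the model ignores capacitance and resistance entirely.

```python
def inductive_droop_v(loop_inductance_h: float, current_step_a: float, rise_time_s: float) -> float:
    """First-order droop across the loop inductance: V = L * (dI / dt)."""
    return loop_inductance_h * current_step_a / rise_time_s


# Assumed values for illustration only.
CURRENT_STEP_A = 100.0        # hypothetical load step
RISE_TIME_S = 100e-9          # hypothetical 100 ns rise time
COMPONENT_LOOP_H = 50e-12     # hypothetical loop inductance, embedded component
FILM_LOOP_H = 5e-12           # hypothetical loop inductance, film near the load

component_droop = inductive_droop_v(COMPONENT_LOOP_H, CURRENT_STEP_A, RISE_TIME_S)
film_droop = inductive_droop_v(FILM_LOOP_H, CURRENT_STEP_A, RISE_TIME_S)

print(f"embedded component droop: {component_droop * 1e3:.1f} mV")
print(f"film droop:               {film_droop * 1e3:.1f} mV")
```

The trade-off described above still applies: the film in this sketch responds with less droop but, per the text, stores less energy than discrete embedded components.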

Both capacitor components and films are inputs to the package substrate manufacturer who acts as the gatekeeper. But components are easier to substitute. Film materials that have already been specified in the process are "stickier." Organizations can build a bigger moat around integrated films. This is especially true in package substrate applications, where there are incentives to stick with what works. That's why Ajinomoto has been very successful and holds a large share of the market for buildup film materials.

A higher-risk but higher-reward approach is to vertically integrate across the supply chain. It would require a passive component vendor to integrate into package substrate manufacturing. The integrated organization would supply specialized substrates with integrated energy storage components. Package substrate manufacturing is already specialized and very competitive, so this isn't a strategy for the faint of heart (nor should this be considered a blanket recommendation). However, it has been done before. Murata has successfully used this strategy with its Liquid Crystal Polymer (LCP) technology to create custom RF substrates in its printed circuit business.

Conclusion

Even though Moore's Law has slowed, there are plenty of opportunities for the supply chain to innovate in the next generation of AI processors after Blackwell. The roadmaps are well known. However, the current AI market structure makes it hard for new entrants to accrue value. Winning requires innovative technology, but it also requires organizations to build a moat through products and transactions that deliver intellectual property (IP). One way to do that is to integrate across supply chain levels. It's by no means easy and only compounds the development risks. But it is one of the best ways to move from a low-differentiation "value share" to a high-differentiation "value creation" business. The supply chain organizations that can invent technology and deliver it in an integrated form factor stand to be the next generation of winners in the AI growth wave.

If you find these posts insightful, subscribe above to receive them by email. If you want to learn more about the consulting practice, or how we can help with your product strategy, contact us at info@rcdadvisors.com.